We’ve done dirigibles, jets, battleships and steam engines. Today, Scott Locklin introduces us to a crazy machine they called SAGE.

While cold war jets are an old interest of mine, almost everything built to fight the cold war fascinates me. All ages are characterized by madness; only a few have that madness captured in physical objects. Consider the largest computer ever built: the “Semi-Automatic Ground Environment” or SAGE system.

The SAGE system was designed to solve a data fusion problem. Radar installations across North America kept watch against Soviet bombers. These needed to be networked together and coordinated with air defense missiles and interceptors. Seems simple, right? In the 1950s and 1960s, this was not simple. The country is big; hundreds of radar stations and sensors needed to be integrated. It wasn’t as easy as it was in England in WW-2, when enemy aircraft positions were plotted by hand on maps as the radar data came in: North America is much larger, and the planes of the 50s and 60s traveled much faster. No group of people could make the decisions in time to mount an effective defense. You needed some kind of computer to make them.
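To make “data fusion” a little more concrete, here is a minimal sketch of the core idea: reports from several radars that see the same aircraft get merged into one track, and reports that don’t match anything start a new track. This is a textbook gated nearest-neighbour association, not SAGE’s actual algorithm (which the article doesn’t describe). The flat x/y grid in kilometres, the 10 km gate, and the names `Track` and `fuse` are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    track_id: int
    x: float          # km east of an arbitrary origin (assumed flat grid)
    y: float          # km north of the same origin
    reports: int = 1  # number of radar reports merged into this track


def fuse(reports, gate_km=10.0):
    """Greedy gated nearest-neighbour association (hypothetical sketch).

    reports: list of (station, x_km, y_km) tuples from different radars.
    Each report joins the nearest existing track within gate_km;
    otherwise it starts a new track. Track position is the running mean
    of all reports assigned to it.
    """
    tracks = []
    for _station, x, y in reports:
        best, best_d = None, gate_km
        for t in tracks:
            d = math.hypot(t.x - x, t.y - y)
            if d <= best_d:
                best, best_d = t, d
        if best is None:
            tracks.append(Track(len(tracks) + 1, x, y))
        else:
            n = best.reports
            best.x = (best.x * n + x) / (n + 1)
            best.y = (best.y * n + y) / (n + 1)
            best.reports = n + 1
    return tracks


# Two stations see the same bomber; a third sees a different aircraft.
for t in fuse([("A", 100.0, 200.0), ("B", 103.0, 198.0), ("C", 400.0, 50.0)]):
    print(t)
```

Two of the three reports fall within the gate and collapse into one track at roughly (101.5, 199); the third becomes a second track. A real system would also have to predict each track forward in time before comparing, which is exactly where the raw speed of a computer starts to matter.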

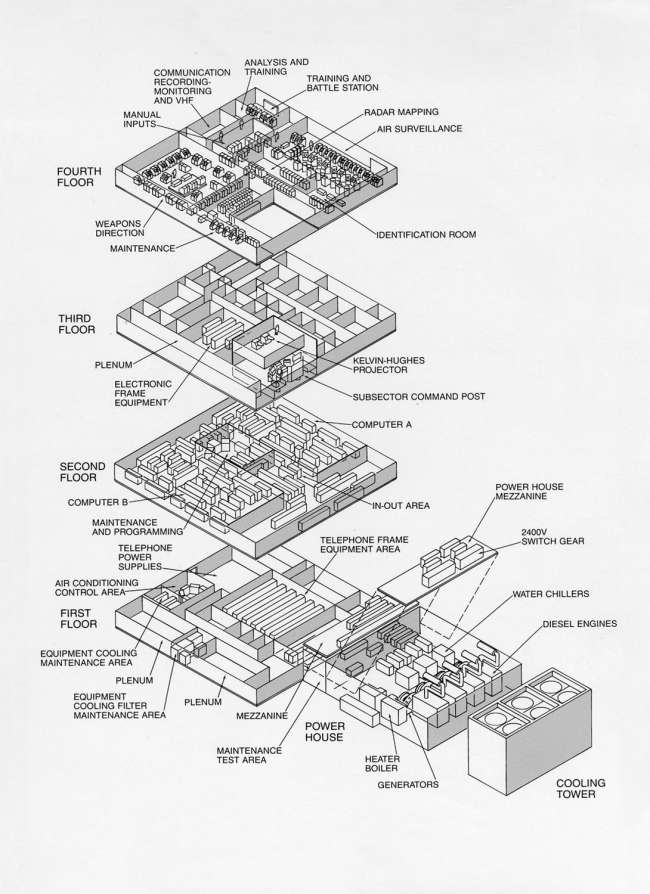

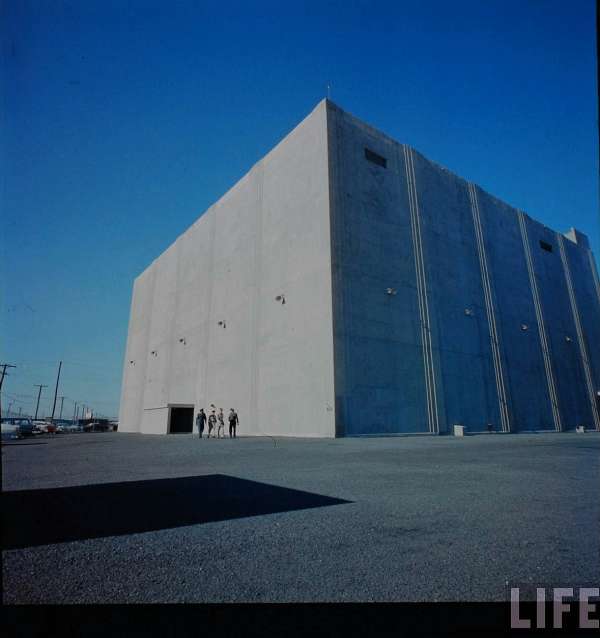

These giant electric brains took up an acre or so of real estate, and were encased in huge windowless concrete pillboxes all over the country (top).

The SAGE system had many firsts: it was the first nationwide networked computer system. While it used special leased telephone lines and some of the first modems (at a blistering 1300 baud), it was effectively the internet, long before the internet. It was the first to use CRT screens, and the first to use a “touch screen interface” via light pens on the CRT. It was the first to use magnetic core memory. It was the first real-time, high-availability computer system. It was the first computer system to use time sharing. Many people attribute the genesis of computer programming as a profession to the SAGE system. Modern air traffic control and, of course, computer booking systems descend from the SAGE system.

Each of the 27 computers that made up the system was a dual-core 32-bit CPU made of 60,000 vacuum tubes, 175,000 diodes and 12,000 of those newfangled transistors. Memory: 256k of magnetic core RAM (invented for this project) with a 6-microsecond cycle time. These things weighed 300 tons, consumed 3 megawatts of electrical power and ran a blistering 75,000 operations per second. The dual cores weren’t used for multiprocessing: one was kept on hot standby in case the other failed. Our grandfathers understood fault tolerance; they had a system for replacing tubes before they burned out, and downtime was typically only a couple of hours a year.
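Those figures invite some back-of-envelope arithmetic. The sketch below takes the article’s numbers at face value and reads “a couple of hours” as 2 hours a year; the function names are hypothetical. The duplex formula is the idealized textbook model (independent failures, instant switchover to the hot standby), not a description of how SAGE’s switchover actually behaved.

```python
HOURS_PER_YEAR = 365 * 24  # 8760, ignoring leap years


def back_of_envelope(ops_per_s=75_000, mem_cycle_us=6.0,
                     power_w=3e6, downtime_h_per_year=2.0):
    """Derived figures from the article's numbers (taken as given)."""
    us_per_op = 1e6 / ops_per_s                 # microseconds per operation
    mem_cycles_per_op = us_per_op / mem_cycle_us
    joules_per_op = power_w / ops_per_s         # energy spent per operation
    availability = 1 - downtime_h_per_year / HOURS_PER_YEAR
    return us_per_op, mem_cycles_per_op, joules_per_op, availability


def duplex_availability(a_single):
    """Availability of two CPUs where either one suffices, assuming
    independent failures and instant switchover to the hot standby."""
    return 1 - (1 - a_single) ** 2


us, cycles, joules, avail = back_of_envelope()
print(f"{us:.1f} us/op, {cycles:.1f} memory cycles/op, "
      f"{joules:.0f} J/op, availability {avail:.5f}")
print(f"two 99%-available CPUs in duplex: {duplex_availability(0.99):.4f}")
```

So each operation took roughly 13 microseconds, a bit over two core-memory cycles, and burned about 40 joules. Two hours of downtime a year works out to better than 99.97% availability, and the duplex line shows why a hot spare helps so much: under those idealized assumptions, two merely 99%-available processors would together be up 99.99% of the time.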

Each one drove 50 to 150 GUI workstations, and interacted with more than a hundred radars, interceptors and missile batteries. Remember that the next time you whine that your computer ain’t fast enough. No. This thing is less powerful than even a shitty cell phone (the 386 was probably approximately equivalent), and it did significantly more than your PC does. Each one was capable of coordinating the air defense of the entire North American continent.
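To get a feel for how thin that processing budget was spread, divide the 75,000 operations per second evenly across everything attached to one machine. The sketch assumes exactly 100 radars, interceptors and missile batteries (the article says “more than a hundred”, so this is a lower bound), and `ops_per_device` is a hypothetical name. An even split is crude: real software also spent cycles on tracking, display and I/O, so the true per-device share was smaller still.

```python
def ops_per_device(ops_per_s, consoles, external_devices):
    """Average operations per second per attached device if the CPU's
    whole throughput were split evenly (an optimistic upper bound)."""
    return ops_per_s / (consoles + external_devices)


# Assumed: 100 external devices, consoles at either end of the 50-150 range.
for consoles in (50, 150):
    print(consoles, "consoles:", ops_per_device(75_000, consoles, 100), "ops/s each")
```

That comes to somewhere between 300 and 500 operations per second per device, at best.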

It is worth pointing out that these machines not only ran all that equipment, and dealt with all that data, they also guided interceptors to their target locations. The F-106 and F-102 could be directly controlled by the SAGE system after takeoff. We think of “drones” as the latest and greatest newfangled thing in warfare: they have actually existed for a very long time. In many ways the SAGE system was more impressive than, say, the Predator system.
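Guiding an interceptor boils down to a classic geometry problem: where should a fighter flying straight at constant speed head so that it meets a bomber flying straight at constant velocity? The sketch below solves the textbook collision-course version in a flat 2-D plane. The article doesn’t describe SAGE’s actual guidance equations, so treat this as an illustration of the kind of arithmetic involved; the function names, the flat geometry and the sample numbers are all assumptions. Writing D for the bomber’s offset from the interceptor, V for its velocity and s for the interceptor’s speed, the meeting time t is the smallest positive root of (V·V − s²)t² + 2(D·V)t + D·D = 0.

```python
import math


def intercept_time(target_pos, target_vel, interceptor_pos, interceptor_speed):
    """Earliest t > 0 at which an interceptor flying straight at constant
    speed can meet a target moving at constant velocity (flat 2-D model,
    consistent units). Returns None if no intercept is possible."""
    dx = target_pos[0] - interceptor_pos[0]
    dy = target_pos[1] - interceptor_pos[1]
    vx, vy = target_vel
    a = vx * vx + vy * vy - interceptor_speed ** 2
    b = 2 * (dx * vx + dy * vy)
    c = dx * dx + dy * dy
    if abs(a) < 1e-12:               # equal speeds: the equation is linear
        return -c / b if b < 0 else None
    disc = b * b - 4 * a * c
    if disc < 0:                     # interceptor can never catch up
        return None
    root = math.sqrt(disc)
    times = [t for t in ((-b - root) / (2 * a), (-b + root) / (2 * a)) if t > 0]
    return min(times) if times else None


def intercept_point(target_pos, target_vel, interceptor_pos, interceptor_speed):
    """Where and when the intercept happens, or None."""
    t = intercept_time(target_pos, target_vel, interceptor_pos, interceptor_speed)
    if t is None:
        return None
    return (target_pos[0] + target_vel[0] * t,
            target_pos[1] + target_vel[1] * t), t


# Bomber 300 km east heading north at 8 km/min; fighter at 10 km/min.
print(intercept_point((300.0, 0.0), (0.0, 8.0), (0.0, 0.0), 10.0))
```

With those made-up numbers the fighter meets the bomber after 50 minutes at (300, 400) km, having flown 500 km. A bomber that is faster than the interceptor and flying away from it yields `None`. The machine had to redo this sort of calculation continuously as radar tracks updated.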

Another interesting piece of the SAGE system was the BOMARC missile system. The BOMARC (made by Boeing and the Michigan Aeronautical Research Center, which no longer exists) was primarily ramjet powered and carried either a small nuke or a half ton of conventional explosives. It was entirely dependent on the SAGE system for guidance to its target. It was also incredibly stupid and dangerous: the original rocket boosters used hypergolic fuels and would occasionally explode spectacularly in their silos, spreading dangerous plutonium around.

The SAGE system started running in 1958 and didn’t stop until 1984. Was it necessary? Like many interesting cold war artifacts, SAGE was more or less made obsolete by missiles around the time it deployed. While it did cost around $90 billion in 2013 dollars, it was also responsible for a good fraction of the technological things we now take for granted. Only barbarians do not remember their history, so anyone involved in modern technological projects should study it for lessons in engineering practice on long-term, large-scale projects.

First lesson I take from the SAGE system: solving the right problem. SAGE solved an important problem, that of air defense from enemy aircraft. It did so beautifully. The problem was, by the time it was deployed, bomber attack was a secondary issue: the primary threat was ballistic missiles. The US probably needed something like it anyway, but it is worth noticing that long-term, large-scale projects could very well be made redundant by deploy time.

Second lesson I take from the SAGE system: assemble a team that knows how to solve similar problems. MIT already had a computer on hand which constituted half of the solution, so it made perfect sense to scale up some parts of MIT into the MITRE Corporation and Lincoln Laboratory. Let’s say the government got a bug up its ass to spend $100 billion building a computer with the same capabilities as a dog’s brain, or one that could program itself. What institution would be most qualified to do this? I can’t answer this question, because pretty much nobody knows how to do something similar.

The third and final lesson I’ll take from the development of SAGE: break down the problem into manageable pieces, and solve them. They used the technology on hand in the 50s: vacuum tubes, telephone lines and CRTs. They didn’t postulate any significant breakthroughs in order to get ‘er done. They made do with what they knew was possible. As such, the path to success was obvious. Engineering genius came along the way. If you don’t have manageable pieces, you don’t have a real project: you have a wish. What are the manageable pieces needed to make “nanotech” or controlled nuclear fusion a reality? What are the manageable pieces needed to make quantum computing or deriving all electrical power from the sun a reality? I don’t know, and I don’t know of anybody else who does: therefore, such things do not count as legitimate long-term projects.

“One of the outstanding things… was the esprit de corps—the spirit that pervaded the operation. Everyone had a sense of purpose—a sense of doing something important. People felt the pressure and had the desire to solve the air defense problem, although there was often disagreement as to how to achieve that end. Energy was directed more toward solving individual problems, such as making a workable high-speed memory or a useable data link, than it was toward solving the problem of the value of the finished product. It was an engineer’s dream.” –John F. Jacobs, former Senior Vice President, The MITRE Corporation

Insanely fascinating SAGE manuals found here:

Are these huge concrete bunkers still standing and full of enormous immovable computers quietly gathering dust, I wonder?

Ironic that now I have to deal with a computer programme called SAGE, which is just an annoying accounting software system.

“the 386 was probably approximately equivalent”

At 65 thousand operations per second? I thought that the VAX-11/780 claimed a million instructions per second, though DEC later switched to reporting VAX units of performance (VUPs) rather than millions of instructions per second (MIPS). And for raw crunching, that old VAX wasn’t in the same league as the 386. Of course, it didn’t have to run Word or Solitaire.

I believe that the US military went into Gulf War I with a lot of embedded computers that were 286 or 386 under the hood but emulating old minicomputers like the DG Nova.

Interesting point in your last para about the need for projects to have manageable pieces to achieve technological breakthroughs. Is this something of an iron law, I wonder?

Are there any massive state-led tech projects going on at the moment which will have a similar impact on technological development more generally? I can think of CERN but nothing else. Should we be worried about this? I mean where is the next internet going to come from?

Incidentally, I attended a design/tech conference last year whose keynote speaker was James Burke (well-known over here if not over in the US). He had imbibed very deeply of the nanotech Kool-Aid. His advice was to tighten your seatbelts, as it’s all just about to kick off. We’d be making everything we need in our garages within a couple of decades.